Machine learning on a budget, Part 2

Hit the ground running!

by Dr. Tomás Silveira Salles



Welcome back to our short guide to machine learning and data collection. As we discussed in part 1, collecting training data for machine learning algorithms can be a huge obstacle, especially for startups and small businesses.

While in the first part we concentrated on the different training styles and methods that can be used, this time we are mainly interested in the ways a product can apply machine learning, and how a business model can be designed to take the difficulty of obtaining data into account.

All three ideas explored below revolve around the same base concept: Starting with a good but imperfect product and incrementally perfecting it with the market's help. Let's dive into it!

Accuracy doesn't scale linearly. If 95% can be reached with 100k data points, it might take twice as many to reach 96%!

The relation between the amount of training data used and the resulting accuracy of the trained machine is far from linear. If you can achieve 95% accuracy using 100 thousand labelled data points, it might take 100 thousand more to get to 96% accuracy. So that 1% improvement could double the costs of obtaining the data, as well as the time needed to collect it and the time needed to train the machine, but will it double your profits?

I'd say it strongly depends on what you're selling. If you're offering a pioneering, innovative solution, it might be wise to launch your product with 95% accuracy, make your brand internationally well-known, close some deals with new investors, and use the profits to cover the costs of the initial development phase. Then you can take your time (and use your new resources) to collect more data, reach 96% accuracy and start selling version 2.0 of your already successful and trusted product.

On the other hand, if someone else has beaten you to market, you might need that extra 1% accuracy to make any profits at all. That is, if your product is not clearly and objectively better than the one people already know, most buyers will opt for the familiar version.

The message here is this: Get some experts to analyse what impact the next batch of labelled data points would have, and whether it wouldn't be easier and cheaper to make improvements through changes in the algorithm. Instead of gaining 1% improvement by doubling the training dataset, you might be able to gain 3% accuracy without acquiring any new data, simply by tweaking a few parameters in the algorithm.
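To make the diminishing returns concrete, here is a minimal sketch that assumes the error rate follows a power law in the number of labelled data points. That assumption, and the function names `fit_power_law` and `points_needed`, are illustrative and not taken from any particular study; the two calibration points are the ones used above (95% at 100 thousand points, 96% at 200 thousand).

```python
import math

def fit_power_law(n1, err1, n2, err2):
    """Fit error(n) = a * n**(-b) through two (dataset size, error rate) points."""
    b = math.log(err1 / err2) / math.log(n2 / n1)
    a = err1 * n1 ** b
    return a, b

def points_needed(target_error, a, b):
    """Invert the assumed power law: labelled points needed for a given error rate."""
    return (a / target_error) ** (1.0 / b)

# Calibration points from the text: 5% error at 100k points, 4% error at 200k points.
a, b = fit_power_law(100_000, 0.05, 200_000, 0.04)

for accuracy in (0.95, 0.96, 0.97, 0.98):
    n = points_needed(1.0 - accuracy, a, b)
    print(f"{accuracy:.0%} accuracy -> roughly {n:,.0f} labelled points")
```

Under this assumed curve, every additional percentage point costs far more data than the previous one, which is exactly why it pays to estimate the curve before ordering the next batch of labels. A real learning curve has to be measured on your own data and may look quite different.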

A setup where the machine helps you with your task is way easier to achieve than one where you need to completely rely on it

As I mentioned in part 1, most machine learning algorithms calculate, for a given input, a value that's somewhere in between the various valid outputs, and then choose and return the valid output that is closest to this intermediate value. For example, when a machine is trying to figure out whether a JPEG is a picture of a cat or not, it might internally return "this has a probability of 45% of being a picture of a cat, and 55% of not being a picture of a cat". But because the algorithm is only allowed to return one of the two values "cat" and "no cat", it chooses the one that seems more likely (in this case "no cat") without revealing that it was highly uncertain. It doesn't have to be this way though!

If you want, the machine can be programmed to be honest when it's in doubt. That is, in cases where the chosen output is not a clear winner, the machine can output something like "this seems not to be a picture of a cat, but I'm not so sure... the difference in probability was only 10 percentage points". With this approach, the machine is not entirely autonomous, but still very helpful.
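As a rough illustration of such an "honest" machine, the sketch below returns the most likely label together with a flag that asks for a human double-check whenever the two probabilities are too close. The function name `classify_with_doubt` and the threshold `min_margin` are hypothetical; a sensible threshold depends on the application.

```python
def classify_with_doubt(p_cat, min_margin=0.2):
    """Return (label, needs_review) for one picture.

    p_cat      -- the model's estimated probability that the picture shows a cat
    min_margin -- hypothetical threshold: below this gap between the two
                  probabilities, the picture is flagged for a human double-check
    """
    p_no_cat = 1.0 - p_cat
    label = "cat" if p_cat >= p_no_cat else "no cat"
    margin = abs(p_cat - p_no_cat)
    return label, margin < min_margin

# The example from the text: 45% cat, 55% no cat, so the answer is flagged.
print(classify_with_doubt(0.45))
# A clear-cut picture is handled without human help.
print(classify_with_doubt(0.97))
```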

Suppose some unlucky person had the job of classifying 4000 pictures a day into the categories "cat" and "no cat". I'm sure they'd be very glad to use a program that can take care of 3900 of them on its own, asking for a double-check only for the 100 remaining pictures. However, if the program tried to classify those 100 trickier images without help, it could make unacceptable mistakes.

Moreover, if the task is to classify pictures into not only 2 but rather thousands of different categories, even when the machine is uncertain it can still narrow down the possibilities. For example, it could say "I'm not sure exactly what's in this picture. It's definitely some kind of mushroom, but I don't know which species", and then the query for human input could be automatically directed at the mushroom-classification-department of your company.
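One way to sketch this narrowing-down, assuming the machine outputs a probability for every fine-grained label: add up the probabilities within each coarse group and route the question to the group that clearly wins. The labels, the `GROUP_OF` mapping, the `route` function and the threshold are all made up for illustration.

```python
from collections import defaultdict

# Hypothetical mapping from fine-grained labels to coarse groups (departments).
GROUP_OF = {
    "chanterelle": "mushroom",
    "porcini": "mushroom",
    "fly agaric": "mushroom",
    "tabby cat": "animal",
    "red fox": "animal",
}

def route(probabilities, threshold=0.8):
    """Decide who answers: the machine, a specialised department, or a generalist.

    probabilities -- dict mapping each fine-grained label to its probability
    threshold     -- hypothetical confidence needed to accept a label or group
    """
    best_label = max(probabilities, key=probabilities.get)
    if probabilities[best_label] >= threshold:
        return "automatic", best_label

    # Not sure about the exact label: check whether the coarse group is clear.
    group_totals = defaultdict(float)
    for label, p in probabilities.items():
        group_totals[GROUP_OF[label]] += p
    best_group = max(group_totals, key=group_totals.get)
    if group_totals[best_group] >= threshold:
        return "ask department", best_group
    return "ask generalist", None

picture = {"chanterelle": 0.40, "porcini": 0.35, "fly agaric": 0.20,
           "tabby cat": 0.03, "red fox": 0.02}
print(route(picture))  # no single species wins, but "mushroom" clearly does
```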

In short: In some applications, the lower accuracy of a 100% autonomous machine could be a deal-breaker, while a less autonomous machine that reaches close to 100% accuracy with human help might be welcome. Depending on the product you're selling, this hybrid version could be a profitable idea that requires much less labelled data.

The hybrid algorithm can also be combined with active learning: Each time the machine is uncertain how to classify a given input and it has to ask a human for help, the decision made by the human can serve as the label for a new training data point, which can be used to improve the machine's accuracy and confidence. This improvement doesn't have to be local (e.g. on the user's phone). The new data point can be sent to a central database so that all users can profit from it with the next update. This way, instead of promising your clients that every update will bring more accuracy, you promise them that every update will make the machines more autonomous, requiring less and less human input.
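The loop described here can be sketched in a few lines. Everything below is a simplified assumption: `predict` stands for whatever model you have and is expected to return a label plus a confidence, `ask_human` stands for your user interface, and `new_training_data` stands for the central database.

```python
def handle_input(item, predict, ask_human, new_training_data, min_confidence=0.9):
    """Classify one input, asking a human only when the machine is unsure.

    Every human answer is stored as a new labelled data point, so the next
    training round can make the machine a little more autonomous.
    """
    label, confidence = predict(item)
    if confidence >= min_confidence:
        return label  # confident enough: no human involved

    human_label = ask_human(item, suggestion=label)
    new_training_data.append((item, human_label))
    return human_label

# Stand-ins for a real model and a real user, for demonstration only.
def fake_predict(item):
    return {"clear.jpg": ("cat", 0.98), "blurry.jpg": ("no cat", 0.55)}[item]

def fake_human(item, suggestion):
    return "cat"  # the human overrules the machine's uncertain guess

collected = []
print(handle_input("clear.jpg", fake_predict, fake_human, collected))
print(handle_input("blurry.jpg", fake_predict, fake_human, collected))
print(collected)  # only the uncertain picture became a new training example
```

In a real product, the collected pairs would be uploaded and merged into the shared training set before the next update.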

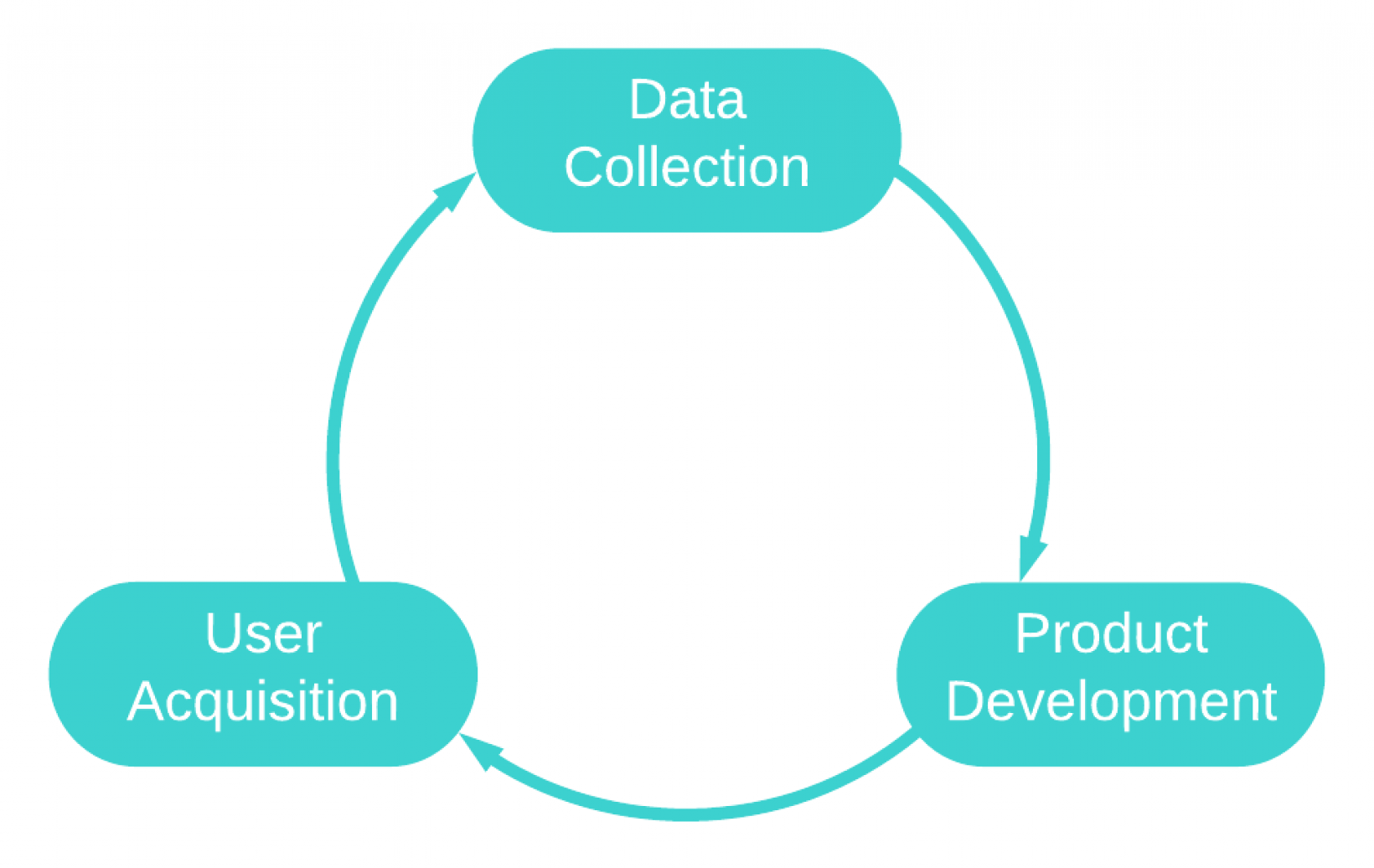

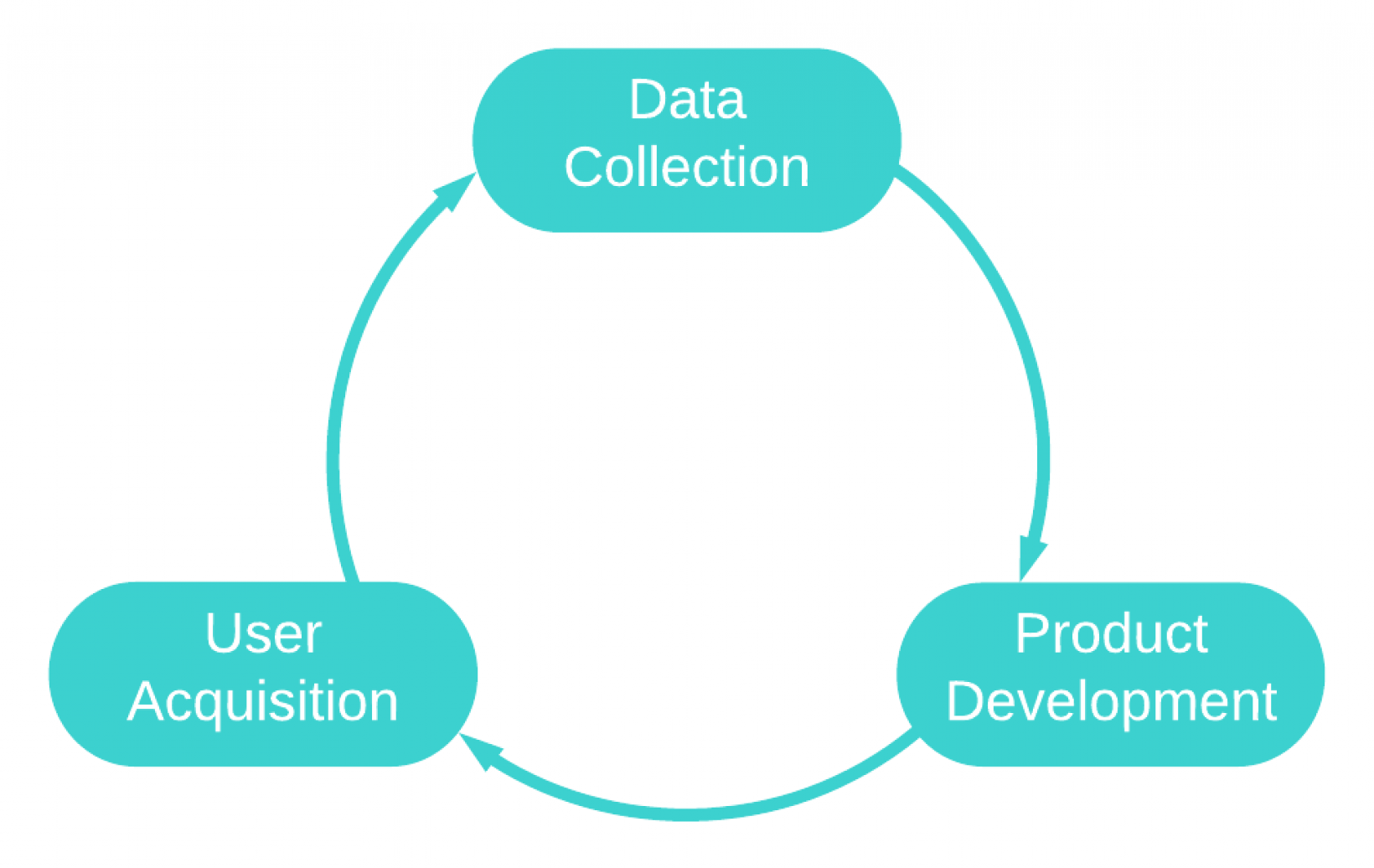

This last idea is not my own. In his lectures and talks, Professor Andrew Ng advocates using your clients as part of a positive feedback loop consisting of data collection, product development and user acquisition.

The iterative process of improving your AI's capabilities with the help of others

In short, you start by collecting as much training data as you can. It might not suffice to make the product you were dreaming of, but maybe it suffices to make a sellable product. Once you have a product, you can acquire your first few users, and as your users apply the product to real-world cases, you obtain more data. This might be unlabelled data if you simply tell the algorithm to send every input to a central server to be stored, or it might be labelled data if you're using a hybrid approach as described in the previous section.

If you're getting new labelled data from your clients, you can directly use it to train the machines further. On the other hand, if you're receiving unlabelled data points, you can either get them labelled (depending on the costs) or use them directly via semi-supervised learning. In any case, the new data points can help you develop a better product, which will get you more users, who will provide even more data, and so on.
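For the unlabelled case, one simple variant of semi-supervised learning is self-training with pseudo-labels: the current model labels the new data itself, and only the predictions it is very confident about are kept as training examples. The sketch below assumes a `predict` function that returns a label and a confidence; the function name `pseudo_label` and the threshold are illustrative, and the methods from part 1 may differ in detail.

```python
def pseudo_label(unlabelled_items, predict, min_confidence=0.95):
    """Split unlabelled inputs into confident pseudo-labelled pairs and leftovers.

    Confident predictions are treated as if they were real labels; the rest
    can be sent to human labellers if the budget allows, or simply set aside.
    """
    confident, uncertain = [], []
    for item in unlabelled_items:
        label, confidence = predict(item)
        if confidence >= min_confidence:
            confident.append((item, label))
        else:
            uncertain.append(item)
    return confident, uncertain

# Stand-in model for demonstration only.
def fake_model(item):
    return {"a.jpg": ("cat", 0.99), "b.jpg": ("no cat", 0.60), "c.jpg": ("no cat", 0.97)}[item]

extra_training_data, to_label = pseudo_label(["a.jpg", "b.jpg", "c.jpg"], fake_model)
print(extra_training_data)  # added to the training set without human work
print(to_label)             # candidates for paid labelling
```

The threshold matters here: if it is too low, the machine's own mistakes are fed back into training and can reinforce themselves.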

When some of Professor Ng's students at Stanford took his advice, they created a company called Blue River Technology and a product that used machine learning in agriculture. Using cameras mounted on tractors, their program could look at the plants and decide which ones were weeds (to be eliminated with herbicide) and which ones were part of the crop (to be nurtured with fertiliser). This reduced the cost of both herbicide and fertiliser, and also reduced the amount of herbicide that came into contact with the crop.

They started the loop by visiting crops and taking pictures manually, which yielded only a very small database for a relatively large amount of work. But their business grew so much after only a few iterations of the cycle that it was bought by John Deere for a whopping 305 million dollars.

If you have a million-dollar idea that involves machine learning in an innovative and meaningful way, I hope by now you're out of excuses not to implement it. At least excuses concerning the collection and labelling of training data.

Combining the methods we saw in part 1 and the strategies described here, the success of your product ends up depending less on your financial resources and more on your effort, creativity, product demand, marketing, design, luck... the usual suspects. There are many areas in which small businesses can get overwhelmed competing against the tech giants, but machine learning doesn't have to be one of them.
