Machine learning on a budget

AI for small businesses

by Dr. Tomás Silveira Salles

13 minute read

About the author

Dr. Tomás Silveira Salles

... writes about the most recent IT trends in layman's terms and tries to shed some light on the darkness beyond hype and buzzwords. You can ask him questions through the contact form at the bottom of this article.

Technology, commerce, science, medicine, art and adding moustaches to selfies. Machine learning is becoming a part of almost every aspect of our lives and everybody wants to profit from it. But if you own a small business and want to board this train without having the time or the resources available to your competition, you'll eventually come across a daunting challenge: Collecting data.

Implementing a neural network today is easier than ever before, and so is testing it, tuning it for maximum accuracy and printing statistical data to defend the results when meeting potential investors. Tools like Google's TensorFlow take care of almost all the math and require you to use only a small set of high-level objects and instructions, and your favourite programming language is very likely to be supported already.

However, to train the network you'll need labelled data, which consists of examples of things the program should analyse paired with information it should ideally conclude from them. For example, if you want your program to analyse images and tell whether they are pictures of cats or not, you could provide lots of pictures of cats paired with the label "cat", and lots of pictures of dogs, cars, flowers etc. paired with the label "no cat".
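
To make the idea of labelled data concrete, here is a minimal sketch in Python. It assumes each picture has already been boiled down to two made-up numbers (the feature values below are hypothetical, not what a real image model would use). A new example is classified by copying the label of the most similar labelled one, a technique called 1-nearest-neighbour classification.

```python
# Hypothetical labelled data: each example pairs two made-up feature values
# (standing in for what would be extracted from a picture) with the desired label.
labelled_data = [
    ((0.9, 0.8), "cat"),
    ((0.8, 0.9), "cat"),
    ((0.1, 0.2), "no cat"),
    ((0.2, 0.1), "no cat"),
]


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def predict(features, data):
    """1-nearest-neighbour: return the label of the most similar labelled example."""
    _, label = min(data, key=lambda example: squared_distance(example[0], features))
    return label


print(predict((0.85, 0.75), labelled_data))  # cat
print(predict((0.15, 0.3), labelled_data))   # no cat
```

A real product would need far more examples and features, but the shape of the data (an input paired with the output we want) stays the same.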

This article is a short "guide to data collection" in which we'll try to show that machine learning really is for everyone. Small businesses just have to work harder -- and most importantly smarter -- to compensate for the probably modest database they'll be able to collect. The "smarter" part is where we hope we can help!

In this first half of the guide we'll talk about different methods used in machine learning algorithms, explain when and where they can be most effectively applied and how they differ in their hunger for data. Part two will be concerned with market strategies for products that use machine learning, discussing how they can give startups and small businesses a fighting chance and how they can be coupled with the methods discussed here.

The sheer amount of data is usually already a problem. Depending on the difficulty of the task and the accuracy that your product needs, you may need thousands of labelled examples, or tens of thousands, or hundreds of thousands. It might already be infeasible for a small business to even get the example data points. Labelling them is then the second part of the challenge.

If you're lucky, labelling each individual example is an easy task and you can get lots and lots of people to help you. Google's reCAPTCHA is an example of massive online collaboration to create labelled data, in part for machine learning, but also for digitizing books and more.

However, if analysing your data requires expertise (for example, classifying pictures of mushrooms into the categories "tasty", "poisonous" and "do not eat at work"), the labelling phase might cost not only more money than you can afford, but also more time, since it has to be done by a small group of people and each individual example takes longer to analyse (and/or has to be checked by more than one expert, for safety).

When we talk about labelled data, we're actually focusing on an area of machine learning called supervised learning. It is called "supervised" because, during training, the labels tell the machine (for each particular example) exactly what the output should be. This is not, however, the only type of machine learning algorithm available, and in many cases not the most suitable.

Unsupervised learning is about finding common patterns in the data, without having a predefined list of patterns to look for.

Suppose you find a huge library on Mars, with thousands of books written in an ancient alien language, using an ancient alien alphabet. Your task is to digitise those books and find out what letters exist in this alphabet and what words and common phrases exist in the language. Since you don't know the alphabet at the start, there's no way of creating labelled data for the individual letters. Even if you go through a hundred books classifying the letters manually, book number 7498 might contain a letter you haven't come across yet. Moreover, and most importantly, the letters don't have "names" at the start, and you don't really care what names are assigned to them. For all you care, they could be called "first letter", "second letter" etc. In this case, you'd do better to use unsupervised learning.

Unsupervised learning is about finding common patterns in the data, without having a predefined list of patterns to look for. You can use robots to scan all the alien books and a simple program to recognise isolated characters based on the surrounding white space, thus creating a huge set of data points (but no labels). Then, using unsupervised learning, a computer could organise the pictures of isolated characters into groups of pictures that seem to follow the same patterns and therefore probably represent the same character. In the end, each resulting group corresponds to a letter in the alien alphabet and you can name the letters however you like. This automatically labels the existing data (since it is already separated into the correct groups), and it becomes easy to digitise the books as text files and create lists of all occurring words, common sequences of words etc.
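
The grouping step can be sketched with k-means, one common clustering algorithm (not necessarily the best choice for real alien glyphs). In this minimal Python sketch each character image is assumed to be summarised by two hypothetical numeric features, and no labels are used anywhere. One real limitation: k-means needs the number of groups `k` up front, whereas the size of the Martian alphabet is unknown, so in practice you would try several values of `k` or use a method that infers the number of clusters itself (DBSCAN, for example).

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def farthest_first(points, k):
    """Deterministic start: the first point, then repeatedly the point farthest from those chosen."""
    centres = [points[0]]
    while len(centres) < k:
        centres.append(max(points, key=lambda p: min(squared_distance(p, c) for c in centres)))
    return centres


def kmeans(points, k, iterations=10):
    """Group unlabelled points into k clusters (Lloyd's algorithm).

    Returns the cluster index of each point (from the last assignment step) and the centres.
    """
    centres = farthest_first(points, k)
    for _ in range(iterations):
        # Assignment step: each point joins the group of its nearest centre.
        labels = [min(range(k), key=lambda i: squared_distance(p, centres[i])) for p in points]
        # Update step: move each centre to the average of its group.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                centres[i] = tuple(sum(coords) / len(members) for coords in zip(*members))
    return labels, centres


# Hypothetical 2-feature summaries of nine character images (no labels given).
glyphs = [(0.10, 0.10), (0.15, 0.05), (0.05, 0.12),
          (0.90, 0.10), (0.85, 0.15), (0.95, 0.05),
          (0.50, 0.90), (0.45, 0.95), (0.55, 0.85)]
labels, centres = kmeans(glyphs, k=3)
print(labels)  # [0, 0, 0, 2, 2, 2, 1, 1, 1]
```

The cluster indices play the role of "first letter", "second letter" and so on: they are arbitrary names, and only the grouping matters.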

Reinforcement learning is about letting the machine come up with guesses (solutions) and telling it how good each guess was.

For a different example, suppose you decide to build a huge library on Mars, but since you only brought a couple of astronauts with you, you'll have to use drones to carry all the Martian bricks. Also, the Martians aren't happy about the project, so they keep throwing rocks at the drones as they fly by. You need a computer to pilot each drone and not let it crash, and each drone is equipped with lots of sensors (a gyroscope, an accelerometer, cameras, wind sensors, an altitude sensor, a radar, etc.). Machine learning can help you here, but there's no way to produce sufficient labelled data. In this case, a data point would consist of a set of values obtained from the multiple sensors on a drone, and a label would be an instruction such as "increase thrust on propeller 3 by 42%". There are far too many possible combinations of sensor values, and you don't even know how to label a single data point, because the physics involved is too complex. In this case, you'd do better to use reinforcement learning.

Reinforcement learning is about letting the machine come up with its own guesses (solutions) for each input and telling it how good each guess was, even though no specific solution is expected. For example, if you don't want your drones to crash, they should avoid coming too close to the ground. So instead of telling the computer exactly what to do with each propeller, just let it guess what to do, and then check whether the drone comes closer to the ground as a result. If it does, the chosen reaction was a bad idea, and the computer should learn from it so this doesn't happen again. This "evaluation" of solutions can be done automatically (using the drone's altitude sensor), so no human input is necessary during the training phase.
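
As a minimal sketch of this trial-and-error loop, the Python below reduces the drone problem to its simplest possible form, a so-called multi-armed bandit: there are no sensor readings, just three hypothetical thrust actions, and a made-up simulator returns the change in altitude as the reward. The computer keeps a running average reward for each action, usually picks the best one so far and occasionally tries a random one (epsilon-greedy exploration). A real drone controller would also take the sensor state into account, for example with Q-learning or policy-gradient methods.

```python
import random

ACTIONS = ["decrease thrust", "keep thrust", "increase thrust"]

# Hypothetical simulator: average altitude change (metres) caused by each action.
MEAN_ALTITUDE_CHANGE = {"decrease thrust": -1.0, "keep thrust": -0.2, "increase thrust": 0.5}


def altitude_change(action, rng):
    """Made-up stand-in for flying the drone and reading its altitude sensor."""
    return MEAN_ALTITUDE_CHANGE[action] + rng.gauss(0.0, 0.3)  # noise: wind, rocks...


def train(episodes=500, epsilon=0.1, seed=42):
    rng = random.Random(seed)
    value = {a: 0.0 for a in ACTIONS}  # estimated reward of each action
    count = {a: 0 for a in ACTIONS}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)          # explore: try something random
        else:
            action = max(ACTIONS, key=value.get)  # exploit: best guess so far
        reward = altitude_change(action, rng)     # evaluated automatically, no labels
        count[action] += 1
        value[action] += (reward - value[action]) / count[action]  # running mean
    return value


values = train()
print(max(values, key=values.get))
```

Nobody ever tells the program which action is correct; it should nevertheless end up preferring "increase thrust", simply because that action keeps the drone away from the ground.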

But even if none of this helps and you really do need labelled data to solve your problem, hope is not lost! Below we present three important methods that can be seen as advanced variations of supervised learning and can help you get better results even when data is scarce.

In semi-supervised learning, a large set of unlabelled data can be used to improve the machine's accuracy.

You've decided to learn karate and signed up for some classes. During class, your master tells you how to kick and punch and block and jump. Your master shows you the moves, guides your body while you're trying and gives you feedback after each attempt. But because you want to learn fast to beat that bully who broke your girlfriend's radio, you stay behind after class and practise further in front of the mirror, correcting yourself based on what your master has taught you so far. Each class is only one hour long, but afterwards you can train for several hours before the janitor kicks you out.

Training with your master's help corresponds to using labelled data (appropriately called "supervised" training), while training alone corresponds to using unlabelled data (appropriately called "unsupervised" training). Machine learning algorithms can use a similar "mixed" approach, called semi-supervised learning.

In semi-supervised learning, a small set of labelled data can be used for the initial training, and a much larger set of unlabelled data can be used to improve the machine's accuracy or confidence. This can be extremely useful in cases where labelling data is difficult, but there is abundant unlabelled data.
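
One of the simplest semi-supervised techniques is self-training (also called pseudo-labelling): train on the labelled data, let the model label the unlabelled points it is most confident about, add those to the training set and repeat. The minimal Python sketch below assumes a single hypothetical numeric feature per example and a nearest-centroid classifier; the `min_margin` threshold is an illustrative safeguard that keeps ambiguous points from being learned with a wrong label.

```python
def centroids(labelled):
    """Average feature value for each label."""
    sums, counts = {}, {}
    for x, y in labelled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(x, cents):
    """Nearest-centroid classifier."""
    return min(cents, key=lambda y: abs(x - cents[y]))


def self_train(labelled, unlabelled, min_margin=3.0):
    labelled, unlabelled = list(labelled), list(unlabelled)
    while unlabelled:
        cents = centroids(labelled)
        confident = []
        for x in unlabelled:
            d = sorted(abs(x - c) for c in cents.values())
            if d[1] - d[0] >= min_margin:  # clearly closer to one class than the other
                confident.append(x)
        if not confident:
            break  # the remaining points are too ambiguous to pseudo-label
        for x in confident:
            labelled.append((x, predict(x, cents)))  # the model labels it itself
            unlabelled.remove(x)
    return labelled


labelled = [(0.0, "cat"), (10.0, "no cat")]      # tiny hypothetical labelled set
unlabelled = [1.0, 2.0, 3.0, 4.0, 9.0, 11.0]     # cheaper, unlabelled data
print(predict(5.5, centroids(labelled)))                          # no cat
print(predict(5.5, centroids(self_train(labelled, unlabelled))))  # cat
```

With only the two labelled points the decision boundary sits at 5.0; after the unlabelled points are absorbed, the "cat" centroid moves to 2.0 and the boundary to 6.0, which changes the answer for 5.5.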

A common example of a successful application of semi-supervised learning is speech recognition. Labelled data in this case consists of audio recordings of syllables, single words or short phrases, together with text transcripts. Of course, big companies interested in speech recognition can spend a lot of money and a lot of time and produce large sets of labelled data, but not nearly as large as the amount of unlabelled data that's already publicly available (e.g. the audio tracks from all films, TV shows and YouTube videos). So complementing the initial supervised phase with some unsupervised training is easy and cheap, and can be very helpful.

Of course, unsupervised training for a task that has a specific desired output for each data point can also be dangerous. In the karate example, you may have misunderstood your master about a particular move. If you then practise this move the wrong way for several hours after class, it will actually become harder to fix it in the next lesson than it would be if you hadn't trained by yourself. Similar problems can happen with semi-supervised machine learning, so it's important to check whether the unlabelled data is helping or not.

In active learning, the machine itself carefully selects or creates data points for you to label.

Jane's math exam is next week. Today she had her last class before the exam and her teacher said "tomorrow I'll be in my office for one hour after lunch to answer any questions you might have". Jane is confident in trigonometry and great at solving quadratic equations, but matrix multiplication is still a problem.

It is obvious what she should do during the Q&A: Ask questions about matrix multiplication. She knows what her biggest weakness at the moment is and should focus on that during the limited time she is allowed to ask questions. Why should machines learn differently?

In terms of machine learning this approach is called active learning. Basically, after some supervised learning with a small amount of labelled data, the machine can analyse its weaknesses and ask you to label a few more (carefully chosen) data points which will help it learn as much as possible.

The new data points to be labelled might come from a large set of unlabelled data that you provide from the start, or from an actual application of the program (that is, someone actually uses the trained machine in a real-world case and the input acts as an unlabelled data point), or they might even be created by the machine itself, as if Jane wrote down two matrices and asked her teacher to show her how to multiply them.

There are also several criteria the machine may use to decide which unlabelled data points would be the "most helpful". Common ones are reducing the error rate, reducing uncertainty, and reducing the solution set.

The first one -- reducing the error rate -- is fairly obvious as a general concept (even though the precise meaning of "error rate" can be very complicated).

Reducing uncertainty is less obvious. In most common machine learning algorithms with discrete output (such as classifying pictures as "cat" or "no cat"), the algorithm first computes a value that can be in-between, for example "45% cat and 55% no cat", and then returns the closest valid output (in this case "no cat"). However, the fact that 45% and 55% are so close shows that the machine is somewhat uncertain about the output, and such cases are the ones the machine is most likely to get wrong. Reducing the uncertainty means training the machine further so that this intermediate value separates the possible outputs more clearly, such as "5% cat and 95% no cat".
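
A minimal Python sketch of how a machine could use this to choose what to ask about (a strategy usually called uncertainty sampling): it assumes a hypothetical, already-trained logistic model with made-up parameters `w` and `b`, and simply picks the unlabelled points whose "cat" probability is closest to 50%.

```python
import math


def prob_cat(x, w=2.0, b=-1.0):
    """Hypothetical already-trained model: logistic estimate of P(cat) from one feature."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def most_uncertain(pool, k=1):
    """Uncertainty sampling: return the k points whose P(cat) is closest to 50%."""
    return sorted(pool, key=lambda x: abs(prob_cat(x) - 0.5))[:k]


pool = [-2.0, 0.0, 0.4, 1.5, 3.0]   # unlabelled points the model could ask about
print([round(prob_cat(x), 2) for x in pool])  # [0.01, 0.27, 0.45, 0.88, 0.99]
print(most_uncertain(pool, k=2))              # [0.4, 0.0]
```

The point at 0.4 is the "45% cat" case from the text, so it is the first one the machine would ask you to label.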

Finally, reducing the solution set is the least intuitive one. Suppose you're a detective solving a murder case. The police have rounded up 10 suspects found near the crime scene and you're looking at them from behind a one-way mirror together with the only witness, who unfortunately didn't see the murderer's face. With your outstanding observation skills you notice that 5 of the suspects are humans, and the other 5 are Martians. By asking the witness whether the attacker's skin was green or not, or whether the attacker had antennae on top of its head or not, you can quickly reduce the number of suspects to just 5, no matter what the answer is. In terms of machine learning, the information you have about the attacker corresponds to the labelled data; the questions you could ask about the attacker correspond to the unlabelled data; and the rounded-up suspects correspond to the possible models (ways in which the machine could set its parameters) that fit the currently known information, i.e. that give the right answers at least on the current training data. By asking you to label a well-chosen data point (which corresponds to the detective asking a new question), the machine can narrow down the possibilities for its parameters, hopefully coming closer to the "correct model".
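
For a minimal sketch of reducing the solution set, assume the simplest possible family of models: rules of the form "cat if the (single, hypothetical) feature x is at least t", one model per threshold t. The models that still fit the labelled data are usually called the version space. The machine then asks about the point that splits the remaining "suspects" as evenly as possible, so that whatever the answer, about half of them are ruled out, just like the detective's question about green skin.

```python
def consistent(models, labelled):
    """Keep the models (thresholds t: 'cat' iff x >= t) that agree with every label."""
    return [t for t in models if all((x >= t) == is_cat for x, is_cat in labelled)]


def best_query(candidates, models):
    """Choose the point whose answer rules out the most models in the worst case."""
    def worst_case_left(x):
        say_cat = sum(1 for t in models if x >= t)
        return max(say_cat, len(models) - say_cat)
    return min(candidates, key=worst_case_left)


models = list(range(11))             # 11 hypothetical models: thresholds t = 0..10
labelled = [(1, False), (9, True)]   # x = 1 is "no cat", x = 9 is "cat"
suspects = consistent(models, labelled)
print(suspects)                      # [2, 3, 4, 5, 6, 7, 8, 9]

candidates = [0, 2, 5, 6, 8, 10]     # unlabelled points we could ask about
query = best_query(candidates, suspects)
print(query)                         # 5: either answer leaves 4 suspects
print(consistent(suspects, labelled + [(query, True)]))  # [2, 3, 4, 5] if the answer is "cat"
```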

We'll come back to active learning in part 2 of this guide.

Transfer learning is a way to train machines using a small amount of data that is specific to your problem, together with a large amount of data for a similar problem.

The same day you signed up for your karate lessons (because of the bully who broke your girlfriend's radio), this arrogant kid called Bruce signed up as well. The difference between you and Bruce, apart from his arrogance, is that he's a kung fu black belt. Bruce is super fit, flexible, strong and fast, has great reflexes, and already knows how to kick and punch and block and jump, just in a different style. Even if it's hard for you to admit, realistically speaking Bruce will probably learn karate much faster than you. What he's doing is called transfer learning.

Transfer learning is a brilliant way to train machines using a small amount of labelled data that is specific to your problem, together with a large amount of labelled data for a different but similar problem.

In an article published in the journal Cell in February, a group of researchers trained a machine to classify "macular degeneration and diabetic retinopathy using retinal optical coherence tomography images". In other words, they trained a machine to look at (a specific type of) images of retinas and tell whether the retina shown had one of a few "common treatable blinding retinal diseases", which have to be diagnosed as early as possible.

Instead of starting from scratch, they took their initial parameters from a neural network that had already been trained on millions of labelled data points from ImageNet, a database of images of all sorts of things, labelled with the names of the objects each image depicts. Then, they adapted the network to classify images into their own categories of interest (3 retinal diseases and 1 category for "normal/no disease") and retrained it using only about 100 thousand labelled images of retinas. After 2 hours of training, the machine had already reached an accuracy similar to that of the 6 experts it was compared with. To go even further and prove the usefulness of transfer learning for such applications, they took the original neural network (trained only on the ImageNet data points) and trained it again, but this time using only 1 thousand retinal images from each of their 4 categories. Training took only about 30 minutes and the results -- obviously slightly worse -- were still fairly close to the accuracy of the human experts and produced fewer false positives than one of them.
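
The researchers' neural network is far beyond a short example, but the principle can be sketched in a few lines of Python under heavy simplifying assumptions. Here the "pretrained" part is a single learned direction (computed from class averages on a large, made-up source task), and adapting to the new task means refitting only the final threshold from two labelled target examples. All data and the training rule are hypothetical; this is not the method from the paper, only the same idea of reusing parameters learned on a related problem.

```python
def mean(points):
    """Component-wise average of a list of equal-length tuples."""
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def learn_direction(examples):
    """Simplified training rule: direction from the negative-class mean to the positive-class mean."""
    pos = mean([p for p, label in examples if label])
    neg = mean([p for p, label in examples if not label])
    return tuple(a - b for a, b in zip(pos, neg))


def project(p, w):
    """The learned 'feature': one number per input."""
    return sum(a * b for a, b in zip(p, w))


def fit_threshold(examples, w):
    """Retrain only the last step: a threshold halfway between the two classes."""
    pos = [project(p, w) for p, label in examples if label]
    neg = [project(p, w) for p, label in examples if not label]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2


# Big hypothetical source task: 110 labelled points, only the first feature matters.
source = [((x, y), x > 0) for x in range(-5, 6) for y in range(-5, 6) if x != 0]
# Small hypothetical target task (true rule: x > 2) with only two labelled examples.
target = [((1.0, 4.0), False), ((3.0, -4.0), True)]
new_point = (2.5, 3.0)  # truly positive, since 2.5 > 2

# Transfer: reuse the direction learned on the source task, refit only the threshold.
w = learn_direction(source)                                # (6.0, 0.0)
print(project(new_point, w) > fit_threshold(target, w))    # True

# From scratch: learn everything from the two target examples alone.
w0 = learn_direction(target)                               # (2.0, -8.0)
print(project(new_point, w0) > fit_threshold(target, w0))  # False
```

Learning from scratch, the two target examples suggest that the second feature matters most, which is wrong here; the source task had already taught the model to ignore it, so the few target examples only have to place the boundary.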

While it is unlikely that AI will be trusted to replace human doctors anytime soon, it could certainly become a helpful ally, for example to speed up the pre-screening process, eliminate obvious negatives, quickly recognise obvious positives etc. And because of the level of expertise needed to label data points in any area of medicine, this would be almost impossible to achieve without transfer learning.

This was a longish article with a lot of definitions, so a summary is probably welcome. We outlined three learning styles that are in some sense disjoint: supervised, unsupervised and reinforcement learning. After that, we discussed three variations of supervised learning, which can be applied separately or combined in any way: semi-supervised, active and transfer learning. Here are all six methods again, in a nutshell:

Unsupervised learning

The training data is unlabelled. This is often used for "clustering" problems, i.e., grouping together things that share common patterns, without previously defining which groups should exist. The example to keep in mind is the classification of the alien characters in the unknown alien alphabet.

Reinforcement learning

The training data is unlabelled, but if we give the machine an input data point and it makes a guess of what the output should be, we can easily evaluate the quality of the guess. The example to keep in mind is controlling the drones flying around on Mars, and evaluating the quality of a manoeuvre by the change in altitude (to avoid hitting the ground).

Supervised learning

The data points have labels containing the output we hope to get from the program. This can be used to organise things into groups when the groups are already known. In this case the example to keep in mind is the classification of images into the categories "cat" and "no cat". Another common use of supervised learning is when the expected output is a continuous value. For instance, based on sales data for a given product over the last 10 years, the program could estimate how much of it the company should produce next month to maximise profit.
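
The continuous-output case can be sketched with the simplest supervised regression method, an ordinary least-squares straight line. The monthly figures below are made up, and the sketch only forecasts demand; turning that forecast into a production decision that maximises profit would need extra information such as costs and prices.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


months = [1, 2, 3, 4, 5, 6]                  # inputs
units_sold = [104, 111, 114, 121, 124, 131]  # labels: hypothetical past sales
slope, intercept = fit_line(months, units_sold)
print(round(slope * 7 + intercept, 1))       # 135.6: forecast for month 7
```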

Semi-supervised learning

This is the idea of combining unsupervised and supervised learning. Ideal when there is little labelled data available, but a lot of unlabelled data. The example to keep in mind is taking karate lessons with a teacher, and then training further by yourself after class.

Active learning

This is a complement to basic supervised learning in which the machine is allowed to carefully choose or carefully design unlabelled data points and ask you to label them, so it can learn faster and better by focusing on its weaknesses. The example to keep in mind is the short Q&A with the mathematics teacher before the exam.

Transfer learning

Another complement to supervised training. If a problem similar to yours already has plenty of labelled data available, a machine that has been trained for this similar problem can be adapted and re-trained with labelled data for your specific problem, so it doesn't have to start from scratch. The example to keep in mind is the proficient kung fu fighter who decides to learn karate.

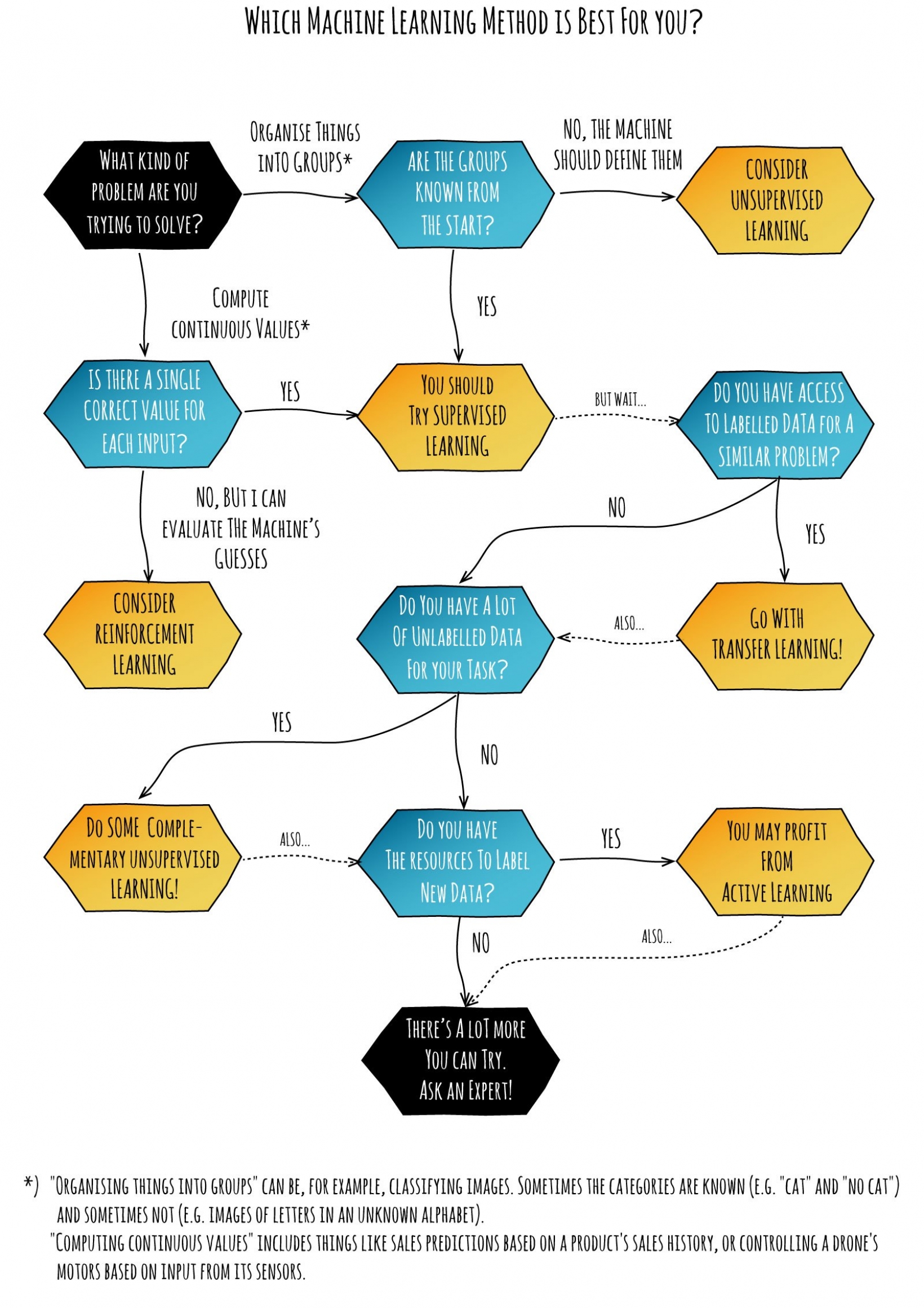

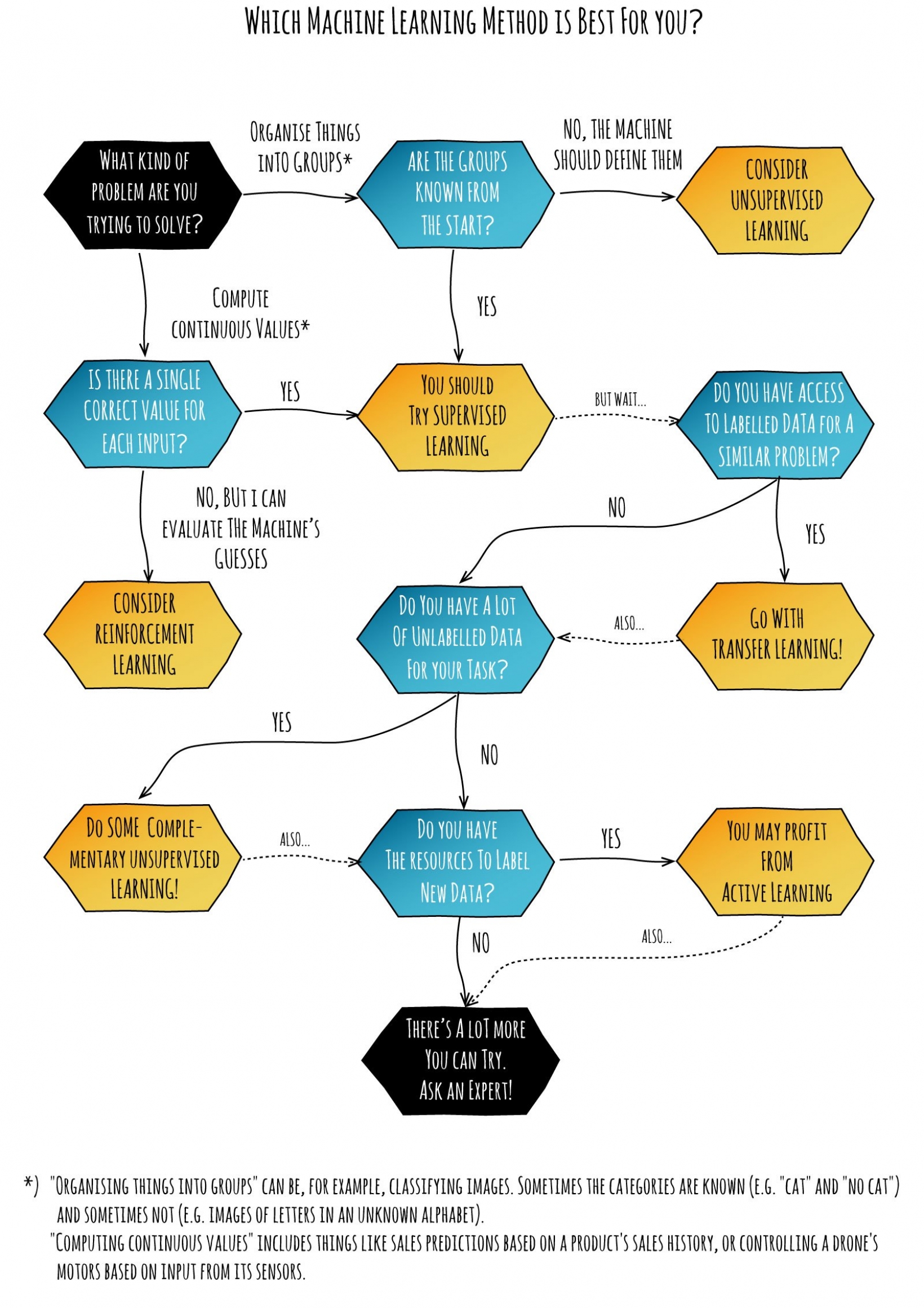

We leave you with a simple flowchart you should now be able to follow:

Flowchart: Which machine learning method is best for you?

Got questions about machine learning and AI? Drop us a line!
